Search engines are the gateway to the world’s information. Every day, people type billions of queries into Google, Bing, DuckDuckGo, and other search platforms, expecting the right answer instantly. But beneath the surface, search engines run a complex series of technical processes to decide which results appear, in what order, and why.

Whether you’re an SEO professional, a developer, a content creator, or a business owner, understanding how search engines work gives you a massive edge. It helps you optimize your site effectively, create content that ranks, fix technical issues, and understand why certain pages succeed while others fail.

This detailed technical guide breaks down exactly how search engines crawl, index, understand, evaluate, and rank web pages. You’ll also learn how AI models shape modern search, emerging ranking factors, and practical steps to optimize your entire website for visibility.

What Happens When You Type a Query?

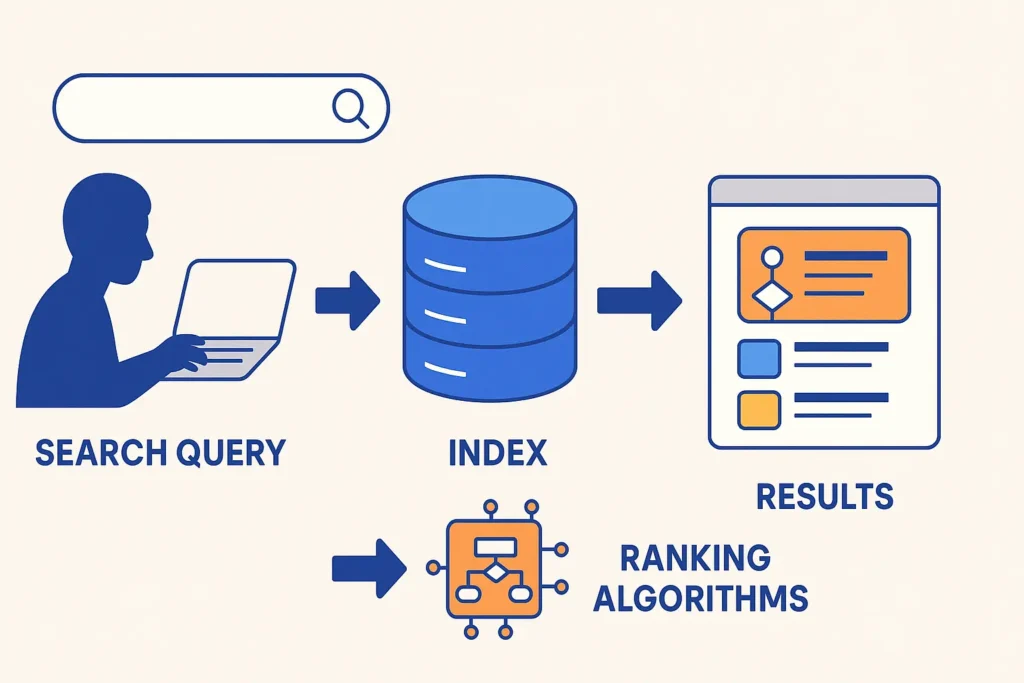

When you type a query into Google, a rapid search engine process begins. Instead of searching the entire web in real time, the search engine looks through its massive index, a database of pages it has already discovered and analyzed.

First, Google interprets your query to understand your intent. Then it scans the index, compares millions of possible results, and ranks them using hundreds of signals like relevance, quality, authority, and user experience. All of this happens in less than a second.

Understanding how search engines work matters because it helps you create sites and content that search engines can crawl, understand, and rank effectively. Without this foundation, SEO becomes guesswork.

This whole system relies on three key stages:

Crawling: bots discover your pages

Indexing: Google stores and understands your content

Ranking: algorithms decide which pages appear first

These three steps (crawling, indexing, and ranking) determine how your site performs in search.

What Is a Search Engine?

A search engine is a software system designed to discover, understand, and organize information from across the internet so it can deliver the most relevant results when a user enters a query. In simple terms, it’s a tool that helps you find the right information instantly, but behind the scenes it relies on complex technology and processes.

The core purpose of a search engine is to:

Collect information from billions of web pages

Store and organize that information

Deliver ranked results based on relevance, quality, and user intent

These core functions are carried out by three main search engine components:

1. Crawler (Spider or Bot)

The crawler explores the internet, scans web pages, follows links, and discovers new or updated content. It is responsible for the first step in the search engine process: finding content.

2. Index

The index is a massive database where all crawled pages are processed, analyzed, and stored.

It includes information about keywords, topics, entities, links, media, and page structure. When you perform a search, the engine pulls results from this index, not the live web.

3. Ranking Algorithms

Ranking algorithms evaluate indexed pages and determine which ones best match a user’s query. They assess hundreds of factors like relevance, authority, freshness, and user experience to produce a ranked list of results on the SERP.
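To make the three components concrete, here is a minimal sketch of an index and a ranking function. The pages, URLs, and the scoring rule (summed term counts) are assumptions made for illustration; real engines use far richer signals.

```python
from collections import Counter, defaultdict

# Hypothetical crawled pages: URL -> extracted text (made up for illustration).
PAGES = {
    "https://example.com/coffee": "how to brew coffee at home with fresh coffee beans",
    "https://example.com/tea": "how to brew green tea at home",
    "https://example.com/beans": "buying fresh coffee beans online",
}

def build_index(pages):
    """Inverted index: map each term to the pages containing it, with counts."""
    index = defaultdict(Counter)
    for url, text in pages.items():
        for term in text.lower().split():
            index[term][url] += 1
    return index

def search(index, query):
    """Score pages by summed term counts, using only the index (no live fetch)."""
    scores = Counter()
    for term in query.lower().split():
        for url, count in index.get(term, {}).items():
            scores[url] += count
    # Highest score first; ties broken alphabetically for a stable order.
    return sorted(scores, key=lambda url: (-scores[url], url))

INDEX = build_index(PAGES)
results = search(INDEX, "brew coffee")
```

The query is answered entirely from `INDEX`, which mirrors the point above: results come from the stored index, not from the live web.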

Difference Between Search Engines and Browsers

A common confusion is between search engines and browsers.

A search engine (like Google, Bing, or DuckDuckGo) finds and ranks information across the web.

A browser (like Chrome, Firefox, or Safari) is simply the tool you use to access and display web pages.

In short:

The search engine helps you find information.

The browser helps you view it.

This distinction is essential when understanding the full search engine definition, how the system works, and how SEO fits into the broader search ecosystem.

Crawling: How Search Engines Discover Webpages

1. What Is Crawling?

Crawling is the first step in the search engine process, where search engine bots (also called crawlers or spiders) scan the web to find new or updated pages. These bots automatically visit URLs, read the content, collect data, and follow the links they find.

Search engine bots traverse the web using links, moving from one page to another just like a person clicking around a website. Internal links help bots navigate within your site, while external links help them discover your site from others. Without proper crawling, your pages cannot be indexed or ranked.
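The link-following behavior described above can be sketched as a breadth-first traversal. This is a simplified model, not how any particular crawler is implemented: the site graph is an in-memory dictionary with hypothetical paths, and `fetch_links` stands in for fetching and parsing a real page.

```python
from collections import deque

# Hypothetical site: each URL maps to the links found on that page.
LINK_GRAPH = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/post-1", "/blog/post-2", "/"],
    "/about": ["/"],
    "/blog/post-1": ["/blog/post-2"],
    "/blog/post-2": [],
    "/orphan": ["/"],  # nothing links here, so the crawl never reaches it
}

def crawl(start, fetch_links, max_pages=100):
    """Breadth-first discovery: visit a page, then queue every new link on it."""
    seen = {start}
    queue = deque([start])
    order = []
    while queue and len(order) < max_pages:
        url = queue.popleft()
        order.append(url)
        for link in fetch_links(url):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return order

discovered = crawl("/", lambda url: LINK_GRAPH.get(url, []))
```

Note that `/orphan` exists but is never discovered, because no visited page links to it.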

2. How Crawlers Find New Content

Search engines use several pathways to locate fresh or updated pages:

Sitemaps

An XML sitemap is a file that lists your important URLs. It acts like a roadmap, telling bots which pages exist and how they are structured. Sitemaps improve crawling efficiency, especially for large or complex sites.
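As an illustration, the sketch below builds a small sitemap with Python’s standard library. The URLs and dates are placeholders; the namespace is the one defined by the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(entries):
    """Return sitemap XML for an iterable of (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")

# Placeholder URLs and dates, for illustration only.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/how-search-works", "2024-02-01"),
])
```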

Internal Linking

Strong internal linking guides bots through your website. Pages with many internal links tend to be crawled more frequently because bots treat them as important.

Backlinks

When another website links to yours, crawlers follow that link and discover your page. Backlinks are one of the fastest ways for search engines to find new content.

Direct Submission via Search Console

Tools like Google Search Console allow you to submit URLs manually. This asks Google to prioritize crawling, which is especially useful for new or recently updated pages, although a request does not guarantee that the page will be indexed.

3. Crawl Budget & Why It Matters

Crawl budget refers to how many pages Googlebot crawls on your site within a given timeframe. Not all sites are crawled equally: Google allocates resources based on site size, health, authority, and performance.

What determines crawl frequency

Site popularity

Server performance

How often content changes

Number of errors encountered

Internal link structure

Backlink profile

High-authority and well-maintained sites get crawled more often.

Crawl budget optimization tips

Fix broken links and redirect chains

Improve site speed and server response

Keep clean, logical architecture

Use sitemaps effectively

Avoid duplicate content

Reduce unnecessary URL parameters

Optimizing your crawl budget ensures search engines find and update your pages regularly.
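One of the tips above, reducing unnecessary URL parameters, can be sketched as a URL normalizer. The list of parameters to strip is an assumption; which parameters are safe to drop depends on your own site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed list of parameters that never change page content on this site.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}

def normalize_url(url):
    """Drop tracking parameters and fragments, and sort what remains."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_PARAMS
    )
    return urlunsplit(
        (parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), "")
    )

variants = [
    "https://Example.com/shoes?utm_source=news&color=red",
    "https://example.com/shoes?color=red&sessionid=abc123",
    "https://example.com/shoes?color=red#reviews",
]
unique_urls = {normalize_url(u) for u in variants}
```

All three variants collapse to a single URL, so a crawler would have one page to fetch instead of three.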

4. Factors That Help or Hurt Crawling

Robots.txt

Your robots.txt file tells search engine bots which pages they can or cannot crawl. A misconfigured robots.txt can accidentally block important content from being crawled.
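You can check how a set of rules will be interpreted before deploying them. The sketch below uses Python’s built-in `urllib.robotparser`; the rules, the `ExampleBot` user agent, and the paths are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

def is_crawlable(path, user_agent="ExampleBot"):
    """Return True if the rules above allow this agent to fetch the path."""
    return parser.can_fetch(user_agent, "https://example.com" + path)

blog_allowed = is_crawlable("/blog/how-search-works")
admin_allowed = is_crawlable("/admin/settings")
```

Individual search engines may interpret edge cases differently from this parser, so treat the result as a sanity check rather than a guarantee.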

Noindex / Nofollow

Noindex prevents a page from being added to the index.

Nofollow tells bots not to follow specific links.

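Overusing these directives can limit how bots navigate your site. Keep in mind that a crawler has to fetch a page to see its noindex directive, so a page blocked in robots.txt cannot have its noindex read.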
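A quick way to audit these directives is to read the robots meta tag from a page’s HTML. This is a minimal sketch that only looks at `<meta name="robots">`; it ignores the `X-Robots-Tag` HTTP header and bot-specific meta tags, and the sample page is made up.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            self.directives.update(
                d.strip().lower() for d in content.split(",") if d.strip()
            )

def robots_directives(html):
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives

# Made-up page for illustration.
PAGE = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
directives = robots_directives(PAGE)
```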

Server Speed

Slow servers reduce crawl efficiency. If your pages take too long to load, bots may crawl fewer pages or abandon the crawl entirely.

Broken Links

404 errors and broken internal links interrupt the crawling process, making it harder for search engines to discover content.

JavaScript Rendering Issues

If important content loads only through JavaScript and isn’t rendered properly, bots may not see or crawl it. Server-side rendering usually solves this issue; dynamic rendering can also work, but Google describes it as a workaround rather than a long-term solution.

In summary:

Effective crawling ensures your pages can be discovered, analyzed, and considered for ranking. By optimizing links, sitemaps, crawl budget, and technical elements like robots.txt and page speed, you help search engine bots crawl your site smoothly, laying the foundation for strong SEO performance.

Indexing: How Search Engines Store & Understand Content

1. What Is Indexing?

Indexing is the second stage of the search engine process. After a page is crawled, search engines analyze the content and store it in a massive database known as the index. This index contains billions of indexed pages, organized by keywords, topics, entities, and relevance.

During content processing, search engines examine:

Text

Images

Links

Metadata

Structured data

Page layout

Once processed, the page is added to the index, making it eligible to appear in search results. If a page isn’t indexed, it cannot rank, no matter how valuable it is.

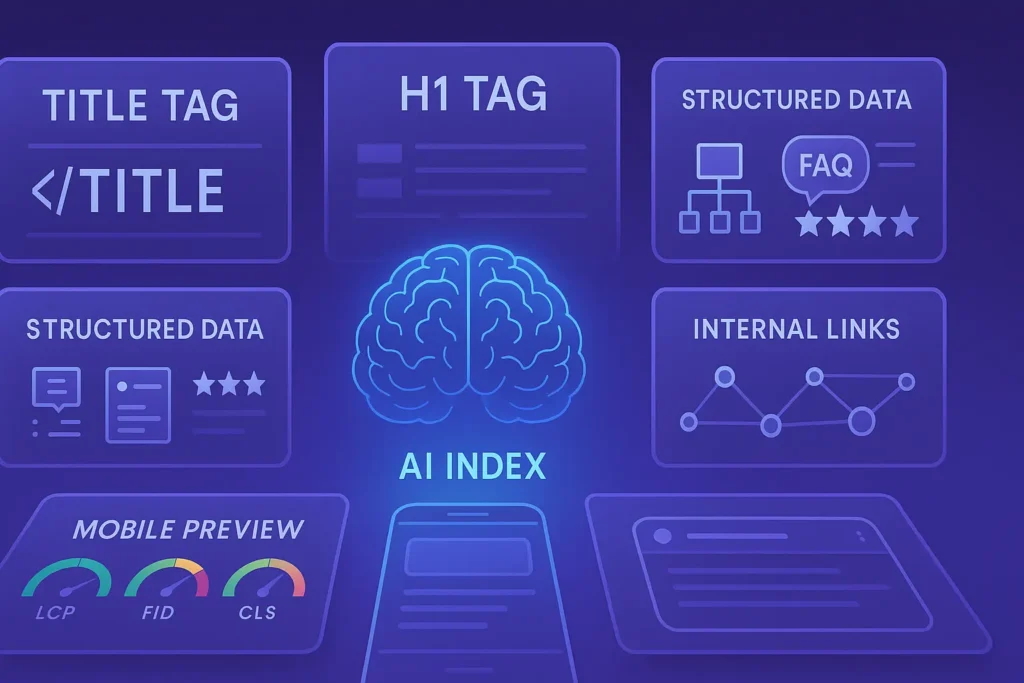

2. How Search Engines Understand Content

To index a page properly, search engines must understand what it’s about, and they depend on clear, structured, and valuable content to do so. To create content that stands out and ranks well, this guide on becoming a successful content creator shows how to produce original, helpful, and well-organized posts.

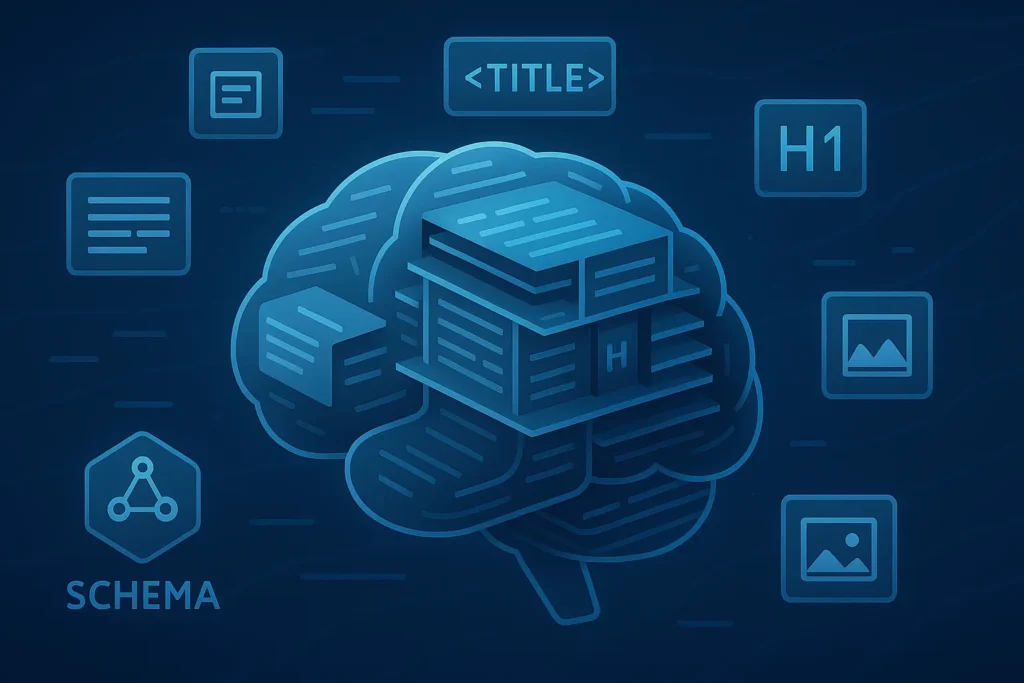

They use several methods to interpret content:

HTML Structure

Elements like the following help search engines identify the main topics and hierarchy of information:

Title tags

Header tags (H1, H2, H3…)

Alt text

Meta tags
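To see the outline a parser can recover from these elements, here is a small sketch that extracts the title and headings from a page. The sample HTML is made up, and real search engines process far more than this.

```python
from html.parser import HTMLParser

class OutlineParser(HTMLParser):
    """Collect the <title> text and the text of h1-h3 headings."""
    HEADINGS = {"h1", "h2", "h3"}

    def __init__(self):
        super().__init__()
        self.title = ""
        self.headings = []    # list of (tag, text) in document order
        self._current = None  # tag currently being captured, if any
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag == "title" or tag in self.HEADINGS:
            self._current = tag
            self._buffer = []

    def handle_data(self, data):
        if self._current:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == self._current:
            text = "".join(self._buffer).strip()
            if tag == "title":
                self.title = text
            else:
                self.headings.append((tag, text))
            self._current = None

def outline(html):
    parser = OutlineParser()
    parser.feed(html)
    return parser.title, parser.headings

# Made-up page for illustration.
SAMPLE = (
    "<html><head><title>How Search Engines Work</title></head><body>"
    "<h1>How Search Engines Work</h1><h2>Crawling</h2><h2>Indexing</h2>"
    "</body></html>"
)
page_title, page_headings = outline(SAMPLE)
```

If the extracted outline does not read like a sensible table of contents, the page’s heading structure probably needs work.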

Semantic Analysis & NLP

Modern search engines use natural language processing (NLP) to understand meaning, relationships, context, and user intent beyond simple keywords.

Entity Recognition (Knowledge Graph)

Google uses its Knowledge Graph to identify people, places, brands, and concepts mentioned on a page, and other search engines maintain similar entity databases. Recognizing these entities helps search engines understand content on a deeper, semantic level.

Canonical Tags

If multiple pages have similar content, canonical tags tell search engines which version you prefer. Search engines treat the tag as a strong hint rather than a command, but it helps keep duplicate content from harming indexability.

3. Why Pages Don’t Get Indexed

Several issues can prevent a crawled page from being added to the index:

Duplicate Content

Pages with identical or overlapping content may be ignored or filtered out.
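One common way to estimate overlap between two pages is to compare their word shingles with Jaccard similarity. The sketch below illustrates that general technique; it is not a description of how any search engine detects duplicates, and the sample texts are invented.

```python
def shingles(text, size=3):
    """Return the set of overlapping word n-grams in the text."""
    words = text.lower().split()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}

def jaccard(a, b):
    """Share of shingles two texts have in common (0.0 to 1.0)."""
    sa, sb = shingles(a), shingles(b)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)

# Invented page texts for illustration.
page_a = "our red running shoes are light and durable for daily training"
page_b = "our blue running shoes are light and durable for daily training"
page_c = "read our guide to brewing coffee at home with fresh beans"

sim_ab = jaccard(page_a, page_b)  # near-duplicates: only one word differs
sim_ac = jaccard(page_a, page_c)  # unrelated pages
```

Pairs with a high score are good candidates for consolidation or a canonical tag; where to draw the line is a judgment call for your own content.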

Thin or Low-Quality Content

Pages with minimal text, poor value, or AI-generated filler may not qualify for indexing.

Blocked Resources

If critical files (like images, scripts, or CSS) are blocked, search engines may not fully understand the page.

Poor Site Structure

Orphan pages, messy navigation, and weak internal linking can make it difficult for crawlers to evaluate content.

Google prioritizes indexing pages that are useful, unique, and well-organized.

4. How to Improve Indexability

To increase the chances that your pages get indexed and stay indexed, focus on these areas:

Optimizing On-Page SEO

Clear titles, descriptive headers, alt text, schema markup, and clean HTML improve understanding and indexation.

Strengthening Internal Links

Internal linking helps crawlers discover and prioritize important pages, improving their visibility in the index.

Improving Content Quality

High-quality, original, in-depth content is far more likely to be indexed than thin or repeated material, and it improves rankings as well. Add value, structure, and clarity. Story-driven content boosts user engagement and SEO. Learn more about how storytelling elevates content marketing.

In summary:

Indexing is the critical step where search engines process your content and store it for future retrieval. By improving indexability through strong internal linking, high-quality content, and optimized HTML structure, you greatly increase the chances that your pages will be fully understood, properly indexed, and ready to compete in search results.

Ranking: How Search Engines Decide Which Pages Show First

Once a page has been crawled and indexed, the next challenge is ranking, where search engines determine which pages should appear first on the results page. This stage is driven by complex algorithms that evaluate relevance, quality, authority, user experience, and intent.

1. What Is a Ranking Algorithm?

A ranking algorithm is a system of rules and calculations used to evaluate indexed content and decide which pages best answer a query. Google’s algorithms look at hundreds of ranking factors to compare pages and assign positions on the SERP.

Search engines constantly refine their systems through different types of updates:

Core Updates

Broad improvements aimed at enhancing search quality and relevance.

Spam Updates

Designed to detect manipulative SEO tactics like keyword stuffing or unnatural link building.

Helpful Content Updates

Focus on surfacing people-first content and reducing low-value or unhelpful pages written primarily for search engines. Google folded this system into its core ranking systems in March 2024.

These updates reflect how seriously search engines take quality and user satisfaction.

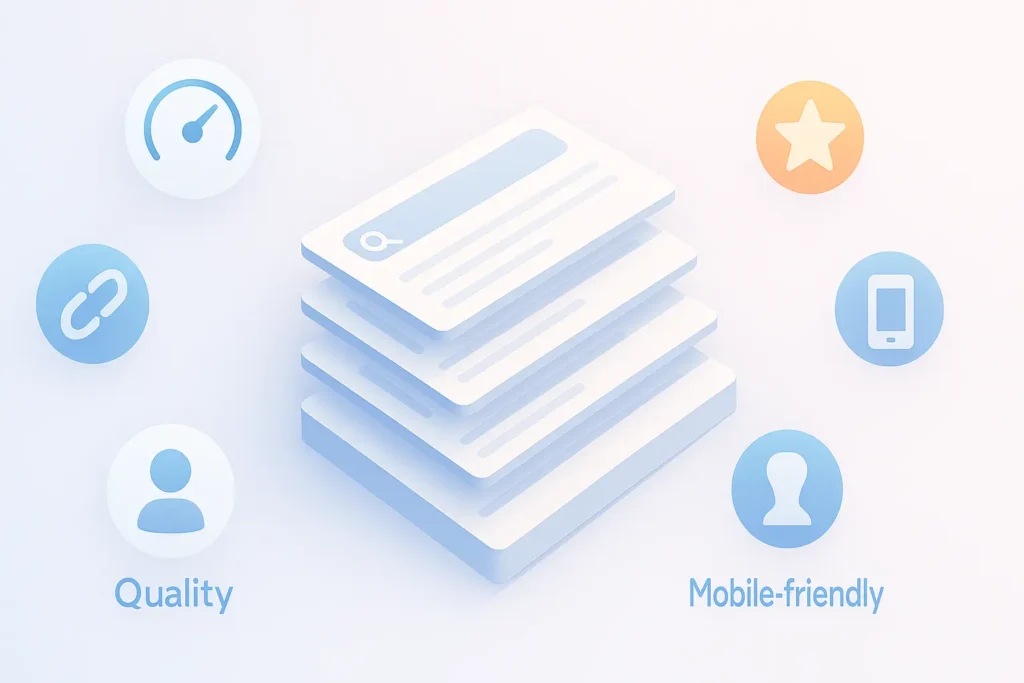

2. Key Ranking Factors (Technical, Content & User Signals)

Search engines rely on a mix of technical performance, content quality, and behavioral signals to rank pages. Ranking well requires more than technical SEO. Search engines reward websites that pair strong optimization with engagement and brand-building. Using effective digital marketing tactics can boost your authority and significantly improve overall search performance.

Some of the most influential factors include:

Relevance to the Query

Search engines analyze how closely your content matches the user’s intent and keywords, both literal and contextual.

Page Quality

Clear structure, original insights, depth, accuracy, and value contribute to stronger rankings.

Backlinks & Authority

Quality backlinks act as votes of trust. Authoritative sites typically rank higher.

E-E-A-T

Experience, Expertise, Authoritativeness, and Trustworthiness are essential, especially for YMYL (Your Money, Your Life) topics. Strong E-E-A-T signals build credibility.

Page Speed & Core Web Vitals

Fast, stable pages provide better user experience, which search engines reward.
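Core Web Vitals are reported against published thresholds. The sketch below rates a measurement using the "good" and "poor" boundaries from Google’s Core Web Vitals guidance as I understand them (LCP in seconds, INP in milliseconds, CLS unitless); verify them against the current documentation before relying on them. The `rate` helper is hypothetical.

```python
# (good_limit, poor_limit) per metric; values assumed from Google's published
# Core Web Vitals guidance and worth re-checking against the current docs.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # Largest Contentful Paint, seconds
    "INP": (200, 500),   # Interaction to Next Paint, milliseconds
    "CLS": (0.1, 0.25),  # Cumulative Layout Shift, unitless
}

def rate(metric, value):
    """Classify a measurement as good, needs improvement, or poor."""
    good_limit, poor_limit = THRESHOLDS[metric]
    if value <= good_limit:
        return "good"
    if value <= poor_limit:
        return "needs improvement"
    return "poor"

# Hypothetical measurements for one page.
report = {
    "LCP": rate("LCP", 2.1),
    "INP": rate("INP", 350),
    "CLS": rate("CLS", 0.3),
}
```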

Mobile-Friendliness

With most searches happening on mobile, and Google indexing the mobile version of pages first, responsive design is effectively mandatory for good rankings.

User Engagement Signals

Interactions like click-through rate (CTR) and dwell time may indicate whether users find the content helpful, although search engines do not publish exactly how they use such signals.

Search engines combine these signals to determine which page offers the best possible experience.

3. How Search Engines Match Intent

Matching search intent is one of the most important ranking factors today. Search engines classify queries into several types:

Informational

The user wants information (e.g., “how search engines work”).

Navigational

They want a specific site (e.g., “YouTube login”).

Transactional

They intend to buy or complete an action (e.g., “best laptop deals”).

Using advanced query analysis and NLP, algorithms interpret the meaning behind a search, not just the keywords. This is where semantic search becomes crucial: search engines consider concepts, context, and entities, not just exact matches.

Pages that best satisfy the intent behind a query naturally rank higher.

4. Personalization & Context

Search engines also tailor results based on user-specific factors, ensuring results are relevant within a personal context:

Location

Local searches (e.g., “pizza near me”) change based on where you are.

Device

Mobile users see mobile-optimized results prioritized.

Past Behavior & Search History

Search engines adjust rankings based on what you’ve clicked or searched before.

Language & Region

Content may vary depending on regional preferences or language settings.

Personalization ensures search results are more accurate and user-focused.

In summary:

Search engine algorithms use a combination of relevance, quality, authority, user experience, and E-E-A-T to decide rankings. By understanding ranking factors, adapting to core updates, and aligning content with search intent, you can dramatically improve your visibility and compete effectively on the SERPs.

How Machine Learning & AI Are Changing Search

The world of search is undergoing its biggest transformation ever, driven by AI in search and machine learning technologies. Modern machine learning search engines no longer rely solely on keywords; they analyze context, user behavior, entities, and intent in ways that mimic human understanding. As AI evolves, search becomes more intuitive, personalized, and accurate.

1. Google’s AI Models (Overview)

Google has introduced several powerful AI models that reshape how queries and content are interpreted:

RankBrain

Launched in 2015, RankBrain was the first deep learning system Google deployed in Search. It helps interpret ambiguous or unfamiliar queries by understanding patterns and relationships between words. RankBrain marked the beginning of AI-driven ranking.

BERT

BERT (Bidirectional Encoder Representations from Transformers) allows Google to understand natural language more like humans do. It reads sentences in both directions to grasp:

Context

Meaning

Relationships between words

This significantly improved the accuracy of NLP search, especially for conversational queries.

MUM (Multitask Unified Model)

MUM can understand information across different languages, formats, and contexts.

It is designed to work across formats such as:

Text

Images

Videos

Audio

MUM helps answer complex, multi-step queries by drawing from diverse sources.

The Gemini Era (Future-Focused)

Google is moving toward a new generation of AI-powered search assistants with models like Gemini, designed to understand deeper context, generate content, and provide real-time reasoning. This marks a shift toward more interactive, conversational search experiences.

2. How AI Improves Search Understanding

AI dramatically enhances how search engines interpret queries and content.

Natural Language Processing (NLP)

Modern search engines understand:

Sentence structure

Intent behind queries

Synonyms and variations

Complex or conversational language

This means users no longer need to type exact keywords; the engine understands what they mean.

Better Understanding of Context and Entities

AI identifies:

People

Places

Concepts

Brands

Relationships between entities

This helps search engines deliver more precise answers and populate features like Knowledge Panels and featured snippets.

AI also reduces errors in interpreting ambiguous queries, for example by differentiating Apple (company) from apple (fruit) based on context.

3. AI and the Future of Search

AI is reshaping not just how search engines understand content but how they deliver results.

Zero-Click Results

More queries are answered directly on the SERP through snippets, panels, and AI-generated summaries. Users often don’t need to visit websites for quick answers.

AI Overviews

Google’s AI Overviews provide detailed, synthesized responses at the top of the results page. These insights pull information from multiple sources and present it in a conversational, easy-to-read format.

Predictive Search

AI anticipates user needs by analyzing:

Search patterns

Location

Previous queries

Behavioral signals

This powers features like autocomplete, suggested queries, and personalized recommendations.

In summary:

Artificial intelligence is transforming the core of how search works. From RankBrain to BERT, MUM, and the Gemini era, AI in search is making engines smarter, more contextual, and more user-focused. As machine learning search engines evolve, SEO strategies must adapt by emphasizing semantic content, entities, and high-value user experiences.

Technical SEO and Its Role in the Search Engine Process

Technical SEO forms the foundation of a high-performing website. While great content and backlinks matter, they only work when search engines can efficiently crawl, render, and index your site. Technical SEO ensures that search engines can access your content without obstacles, making it a critical part of the overall search engine process.

1. Why Technical SEO Matters

Technical SEO is what enables smooth crawling and indexing, the essential first steps before a page can rank. If search engines struggle to navigate your website due to slow loading, blocked resources, or poor structure, your content may never reach its full ranking potential.

Good technical SEO ensures:

Crawlers can easily discover all important pages

Your content is properly processed and understood

Duplicate or unnecessary URLs don’t confuse search engines

Users enjoy fast, reliable page performance

Without a strong technical foundation, even the best content may fail to appear in search results.

2. Key Technical SEO Elements

Search engines rely on specific technical signals to understand and evaluate your website. The most important components include:

Site Architecture

A clear site structure helps search engines and users navigate easily. Strong menus and internal links improve crawlability. If you’re starting a site, this guide on how to build a WordPress website without coding helps you set up an SEO-friendly structure.

URL Structure

Short, descriptive URLs improve clarity for both users and search engines. Clean URLs avoid parameters and unnecessary complexity.

SSL/HTTPS

Secure websites (HTTPS) protect user data and build trust. Google considers HTTPS a ranking signal, making it essential for SEO.

XML Sitemaps

An XML sitemap guides crawlers by listing your important pages. It improves discoverability, especially for large or deep websites.

Structured Data / Schema Markup

Structured data helps search engines understand context, meaning, and content types. Schema markup enables features like:

Rich snippets

FAQs

Reviews

Product data

This improves visibility and click-through rates.
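Structured data is most often embedded as JSON-LD inside a script tag. The sketch below generates a minimal schema.org `Article` block; the headline, author, and date are placeholders, and the `article_jsonld` helper is hypothetical. Check each search engine’s documentation for the properties a given rich result actually requires.

```python
import json

def article_jsonld(headline, author, date_published):
    """Return a <script> tag containing schema.org Article markup."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
    }
    return (
        '<script type="application/ld+json">'
        + json.dumps(data, indent=2)
        + "</script>"
    )

# Placeholder values for illustration.
snippet = article_jsonld("How Search Engines Work", "Jane Doe", "2024-02-01")
```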

Canonicals

Canonical tags prevent duplicate content by telling search engines which version of a URL is the primary one. This protects ranking signals from being diluted across similar pages.

Redirects

Proper use of redirects (especially 301 redirects) helps preserve link equity when moving or deleting pages. Mismanaged redirects can harm both crawling and user experience.
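Redirect chains and loops are easy to spot once your redirect rules are in a lookup table. The sketch below assumes a hypothetical map of old paths to targets; in practice you would build it from your server configuration or a crawl export.

```python
# Hypothetical redirect map: old URL -> target URL.
REDIRECTS = {
    "/old-page": "/interim-page",
    "/interim-page": "/new-page",
    "/loop-a": "/loop-b",
    "/loop-b": "/loop-a",
}

def resolve(url, redirects, max_hops=10):
    """Follow redirects and return (final_url, hops).

    Raises ValueError on a loop or a chain longer than max_hops.
    """
    seen = [url]
    while url in redirects:
        url = redirects[url]
        if url in seen:
            raise ValueError("redirect loop: " + " -> ".join(seen + [url]))
        seen.append(url)
        if len(seen) - 1 > max_hops:
            raise ValueError("too many redirects")
    return url, len(seen) - 1

final_url, hops = resolve("/old-page", REDIRECTS)
```

Any URL that resolves in more than one hop is a chain worth flattening so the old URL points straight at the final destination.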

3. Common Technical SEO Issues

Even well-built sites encounter technical issues that can negatively impact rankings. Some of the most common include:

Duplicate Content

Multiple pages with identical or near-identical content confuse search engines and split ranking authority.

Orphan Pages

Pages with no internal links pointing to them are almost invisible to crawlers. Since bots depend on links, orphan pages rarely get indexed.
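Orphan pages can be found by comparing the URLs you know about (for example, from your sitemap) with the URLs that internal links actually point to. The data below is hypothetical.

```python
# Hypothetical data: URLs listed in the sitemap, and links found on each page.
SITEMAP_URLS = {"/", "/blog", "/blog/post-1", "/landing/spring-sale"}
INTERNAL_LINKS = {
    "/": ["/blog"],
    "/blog": ["/blog/post-1", "/"],
    "/blog/post-1": ["/blog"],
    "/landing/spring-sale": ["/"],
}

def find_orphans(known_urls, links, home="/"):
    """Return known URLs that no other page links to (home page excluded)."""
    linked_to = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a self-link does not make a page reachable
    }
    return sorted(known_urls - linked_to - {home})

orphans = find_orphans(SITEMAP_URLS, INTERNAL_LINKS)
```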

Slow Loading Pages

Slow pages create poor user experience and limit crawling efficiency. They also negatively affect Core Web Vitals and overall SEO performance.

JavaScript Rendering Issues

If key content loads only via JavaScript and isn’t properly rendered, search engines may not see or index it. Server-side rendering often solves this problem; dynamic rendering can too, but Google describes it as a workaround rather than a long-term solution.

In summary:

Technical SEO is the backbone of successful search performance. With strong site architecture, optimized URLs, secure HTTPS, structured data, and clean sitemaps, your website becomes easier for search engines to crawl and understand. By avoiding common technical pitfalls, you strengthen your indexability, improve user experience, and create a solid platform for long-term SEO success.

How to Optimize Your Site for Better Crawl, Index & Rank

Improving your site’s visibility in search engines requires a strategic mix of SEO optimization, technical structure, content quality, and user experience. To achieve strong performance, you must support all three phases of the search engine process: crawling, indexing, and ranking. The following steps outline exactly how to enhance your site’s crawlability, indexability, and ranking power.

1. Improving Crawlability

Search engines need to efficiently discover your pages before they can index or rank them. Better crawlability means bots can navigate your site with ease.

Clean URL Structure

Use simple, descriptive URLs that include relevant keywords and avoid unnecessary parameters. Clean URLs help both users and bots understand your content at a glance.

Fix Broken Links

Broken links (404 errors) disrupt crawling pathways. Regularly audit your site for broken internal and external links to ensure search engine bots can follow every path without interruption.

Optimize Robots.txt

Your robots.txt file should guide crawlers, not block important pages.

Use it to:

Allow crawling of essential content

Block irrelevant or sensitive areas (like admin directories)

Avoid accidentally disallowing key pages

A well-configured robots.txt improves crawl efficiency.

Submit XML Sitemap

Submitting a sitemap through Google Search Console ensures search engines know which pages you want crawled. This is especially important for:

Large websites

New websites

Sites with deep architecture or many updates

A sitemap acts as a roadmap for search engines and boosts overall discoverability.

2. Improving Indexability

Even if a page is crawled, it may not be indexed unless it demonstrates value, clarity, and proper structure. To improve indexability, focus on both content quality and technical signals.

High-Quality, Original Content

Search engines prioritize unique, helpful, and well-written content. Avoid thin, duplicate, or keyword-stuffed pages. Pages offering genuine value are far more likely to be indexed.

Proper Tagging (Title, H1, Alt, Meta)

Clear HTML signals help search engines understand your topic:

Title tags communicate the main idea

H1s structure the content

Alt text describes images

Meta descriptions improve visibility and click-through

Good tagging improves both indexing and relevance.

Strengthen Internal Linking

Internal links guide crawlers through your website and distribute authority across pages. Key pages should have:

Plenty of relevant internal links

Clear anchor text

Logical placement within the site structure

Strong internal linking significantly enhances indexability.

3. Improving Ranking

Once your site is crawlable and indexable, the next step is achieving strong positions on the SERP. Ranking depends on relevance, quality, authority, and user satisfaction. These ranking improvements are essential for long-term SEO success.

Satisfy User Intent

Every page must match what the user is truly looking for, whether it’s information, a product, a comparison, or a tutorial. Aligning content with intent is one of the strongest ranking signals today.

Build Quality Backlinks

Backlinks from trustworthy websites increase your authority and credibility. Search engines treat high-quality backlinks as endorsements, helping your pages rank higher.

Increase E-E-A-T Signals

Showcase:

Real expertise

Author credentials

Transparent sources

Trust-building elements (HTTPS, privacy policy, reviews, citations)

Improving E-E-A-T boosts ranking potential, especially for competitive or sensitive topics.

Optimize Page Experience

Focus on:

Fast loading times

Mobile responsiveness

Smooth navigation

Strong Core Web Vitals

Clean layouts

A better user experience leads to longer dwell time, lower bounce rates, and higher rankings.

In summary:

Effective SEO optimization requires supporting every stage of the search engine process. By enhancing crawlability, strengthening indexability, and improving your ranking signals, you create a website that performs better in search and delivers a more valuable experience for users. These combined improvements are essential for long-term SEO growth and visibility.

Advanced Concepts

As search engines evolve, they rely on more advanced systems than simple keyword matching. Modern search now involves vector search, semantic indexing, passage ranking, better handling of JavaScript, and sophisticated spam detection. Understanding these concepts gives you a deeper view of how search really works under the hood.

1. Vector Search & Semantic Indexing

Traditional search engines used to rely heavily on exact keyword matches. Modern engines, however, increasingly use vector search and semantic indexing.

In vector search, queries and documents are converted into numerical vectors (embeddings) that capture meaning, not just words.

Instead of asking “Do the words match?” the system asks “Are these ideas similar?”

This allows search engines to:

Understand synonyms and related phrases

Return relevant results even when the query doesn’t contain the exact keywords

Better handle natural, conversational language

Semantic indexing organizes content by concepts, topics, and entities, rather than only by specific words. For SEO, this means:

Writing topic-focused, in-depth content

Covering related questions, entities, and use cases

Thinking in terms of topics and intent, not just keyword density
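The idea of comparing meanings rather than words can be sketched with cosine similarity. The three-dimensional vectors below are hand-written stand-ins; real embeddings come from a trained model and have hundreds or thousands of dimensions.

```python
import math

# Toy "embeddings", hand-written for illustration only.
DOC_VECTORS = {
    "affordable laptops for students": [0.9, 0.1, 0.0],
    "cheap notebook computers": [0.8, 0.2, 0.1],
    "how to bake sourdough bread": [0.0, 0.1, 0.9],
}

def cosine(a, b):
    """Cosine similarity: 1.0 means same direction, 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def nearest(query_vector, docs, k=2):
    """Return the k documents whose vectors are closest to the query."""
    ranked = sorted(docs, key=lambda d: cosine(query_vector, docs[d]), reverse=True)
    return ranked[:k]

# Assumed embedding for the query "budget laptop".
top = nearest([0.85, 0.15, 0.05], DOC_VECTORS)
```

Neither matching document contains the word "budget", yet both laptop pages score far above the bread recipe because their vectors point in a similar direction.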

2. Passage Ranking

With passage ranking, search engines don’t just look at your page as a whole; they also evaluate individual sections or passages of your content.

Instead of only ranking full pages, search engines can:

Identify a specific paragraph that answers a query

Surface that passage as a featured result

Rank a long article for multiple, very specific questions

This rewards pages that:

Are well-structured with clear headings

Answer questions directly and concisely within sections

Use logical formatting (H2s, H3s, bullet points, FAQs)

For SEO, it’s smart to:

Break content into clear, focused sections

Answer “People Also Ask” style questions within your article

Use descriptive subheadings that match real search queries
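To illustrate why focused sections help, the sketch below splits an article into passages and picks the one sharing the most terms with a query. Simple term overlap is a stand-in chosen for clarity; production systems rely on learned language models, and the article text is invented.

```python
import re

# Invented article with three short question-and-answer sections.
ARTICLE = """\
What is crawling?
Crawling is how search engine bots discover new and updated pages by following links.

What is indexing?
Indexing is how search engines analyze a crawled page and store it in their database.

What is ranking?
Ranking is how algorithms order indexed pages for a query."""

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric terms."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def best_passage(document, query):
    """Return the blank-line-separated passage with the most query terms."""
    passages = [p.strip() for p in document.split("\n\n") if p.strip()]
    query_terms = tokenize(query)
    return max(passages, key=lambda p: len(query_terms & tokenize(p)))

answer = best_passage(ARTICLE, "how do bots discover pages")
```

Because each section answers one question under a descriptive heading, the matching passage stands out clearly from the rest of the page.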

3. JavaScript SEO Challenges

Modern websites often rely heavily on JavaScript for rendering content, navigation, and user interactions. While this can improve UX, it can also create SEO challenges:

Search engine bots may not fully render or execute JavaScript

Important content may not appear in the initial HTML

Links and text loaded dynamically might be invisible to crawlers

Common JavaScript SEO issues include:

Content only visible after user actions (clicks, scrolling, etc.)

Client-side rendering without fallback HTML

Lazy-loaded elements that aren’t exposed to crawlers

To improve JavaScript SEO:

Use server-side rendering (SSR) or hybrid rendering where possible

Ensure critical content is present in the HTML source

Test pages with the URL Inspection tool in Search Console, including its “View crawled page” option

The goal is to make your site look just as understandable to bots as it is to users.

4. How Search Engines Handle Spam

Search engines devote huge resources to fighting spam, because spammy results destroy user trust. Spam includes:

Keyword stuffing

Hidden text or cloaking

Link schemes and paid link networks

Auto-generated or scraped content

Malicious or deceptive pages

To combat this, search engines use:

Spam-detection algorithms that analyze patterns across billions of sites

Manual actions from human reviewers for extreme cases

Regular spam updates that target new manipulative tactics

When a site is flagged as spam:

Its rankings may drop dramatically

Specific pages may be deindexed

In severe cases, the entire domain can be removed from results

For long-term SEO, the lesson is simple:

Focus on genuine value, clean tactics, and sustainable strategies. Trying to “game” the system with spam almost always leads to penalties sooner or later.

Conclusion

Understanding how search engines work, through the three core stages of crawling, indexing, and ranking, is essential for anyone looking to improve their online visibility. Crawling ensures search engines can find your pages, indexing allows them to understand your content, and ranking determines where your pages appear in search results.

When you understand these mechanics, SEO becomes far more strategic. You know how to structure your site, optimize content, fix technical issues, and match search intent in a way that aligns with how search engines actually operate. This knowledge turns guesswork into informed decision-making.

Now that you understand the full search engine process, it’s time to take action. Strengthen your technical foundation, create high-quality content, improve internal linking, and focus on user experience. Every improvement you make helps search engines crawl, index, and rank your site more effectively, bringing you closer to the visibility and success you’re aiming for.

FAQs

How long does it take to index a page?

Indexing can take anywhere from a few hours to several weeks, depending on website authority and crawl frequency. Using sitemaps, strong internal linking, and proper technical SEO can speed up the process.

Why does my page not appear on Google?

Your page may not appear on Google if it hasn’t been indexed, has technical errors, lacks quality content, or is blocked by robots.txt. Low authority or thin content can also slow visibility in search results.

Do search engines treat AI content differently?

Search engines do not penalize AI content solely for being AI-generated. However, they prioritize helpful, original, high-quality content, so AI writing must offer real value, accuracy, and clarity to rank well.

What is the difference between crawling and indexing?

Crawling is when search engines scan your website to discover pages and content. Indexing is when those pages are stored in Google’s database and made eligible to appear in search results. Both steps are essential for visibility.